Ask Not What You Can Do For Your Container Build…

…Ask What Your Container Build Can Do For You

While learning about Docker containers, it is common to start with a Dockerfile that specifies the steps and dependencies required to build a Docker image. In this article, I am going to build Docker images for three applications, each using a different technology: .NET Core, Node.js, and Python.

The article will focus on two different ways of building the images:

- We describe exactly how to build an image using a Dockerfile

- We let the build process figure out how to build the image (no Dockerfile)

My project structure is as follows:

We work for the container build process

When we care about exactly which base image our projects use, we write a Dockerfile by hand and use it to build our images.

DotNet Core:

For the .NET Core console app project, our Dockerfile is as follows:

Here we define the base image, which in our case is dotnet/core/runtime:3.1. Then we copy our published binaries and define the entry point that runs our app.
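Based on that description, a minimal Dockerfile.dotnet might look like the sketch below. It is written as a shell heredoc so it can be pasted straight into a terminal. The mcr.microsoft.com registry prefix, the /app working directory, and the assembly name myconsole.dll (borrowed from the project name that appears later in the article) are all assumptions.

```shell
# Write a hypothetical Dockerfile.dotnet matching the description above
cat > Dockerfile.dotnet <<'EOF'
# .NET Core 3.1 runtime image: no SDK needed because the app is pre-published
FROM mcr.microsoft.com/dotnet/core/runtime:3.1
WORKDIR /app
# Copy the output of dotnet publish from the local console/ folder
COPY console/ .
# Assumed assembly name; use the .dll your own project produces
ENTRYPOINT ["dotnet", "myconsole.dll"]
EOF
```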

Before we can create a Docker image, we build the .NET console project and publish it to the console folder:

Now we build and run the console image using Dockerfile.dotnet:
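Here is a sketch of the publish, build, and run steps. It assumes the .csproj, the console/ output folder, and Dockerfile.dotnet all sit in the same directory. The Release configuration and the s/console:1.0 tag are assumptions; the buildpack-built image later in the article uses the 2.0 tag.

```shell
# Hypothetical tag for the Dockerfile-based image
IMAGE="s/console:1.0"

# Publish the console app into ./console (Release configuration assumed)
dotnet publish -c Release -o console

# Build from the named Dockerfile (-f), using the current directory as build context
docker build -f Dockerfile.dotnet -t "$IMAGE" .

# Run the app; --rm removes the container once the console app exits
docker run --rm "$IMAGE"
```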

Node:

For our Node project, we use the following Dockerfile:

We use the base image node:14.14.0-alpine3.12. We copy our package*.json files into the working directory and install all dependencies. In our case, there is a single dependency on Express, as we see below. We then copy the rest of our files into the image, expose port 8080, and start our Node app, server.js.
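Following those steps in order, Dockerfile.node could look roughly like this. The /usr/src/app working directory and the use of npm install (rather than npm ci) are assumptions.

```shell
# Write a hypothetical Dockerfile.node matching the steps described above
cat > Dockerfile.node <<'EOF'
FROM node:14.14.0-alpine3.12
WORKDIR /usr/src/app
# Copy package.json and package-lock.json first so the dependency layer is cached
COPY package*.json ./
RUN npm install
# Copy the remaining sources, including server.js
COPY . .
EXPOSE 8080
CMD ["node", "server.js"]
EOF
```

Copying the package files before the rest of the sources means Docker can reuse the cached npm install layer when only application code changes.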

Our package.json file is as follows:

Note that we specify the Node engine version here. This becomes important in the next section, where the build process relies on it to decide which Node version to use.
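A minimal package.json with a single Express dependency and an engines field might look like the sketch below. The package name, the Express version range, and the engines value 14.x are hypothetical. The field that matters is engines.node. There is no start script because npm start falls back to node server.js when a server.js file exists.

```shell
# Write a hypothetical package.json (name and version ranges are placeholders)
cat > package.json <<'EOF'
{
  "name": "node-app",
  "version": "1.0.0",
  "main": "server.js",
  "engines": {
    "node": "14.x"
  },
  "dependencies": {
    "express": "^4.17.1"
  }
}
EOF
```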

We build our Node image using Dockerfile.node and run it:
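A sketch of those two steps follows. It assumes the s/node:1.0 tag and that server.js listens on port 8080, the port the Dockerfile exposes.

```shell
# Hypothetical tag for the Dockerfile-based image
IMAGE="s/node:1.0"

# Build the image from Dockerfile.node in the current directory
docker build -f Dockerfile.node -t "$IMAGE" .

# Publish container port 8080 on the host; EXPOSE alone does not do this
docker run --rm -p 8080:8080 "$IMAGE"

# Then, from a second terminal:
# curl http://localhost:8080/
```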

Python:

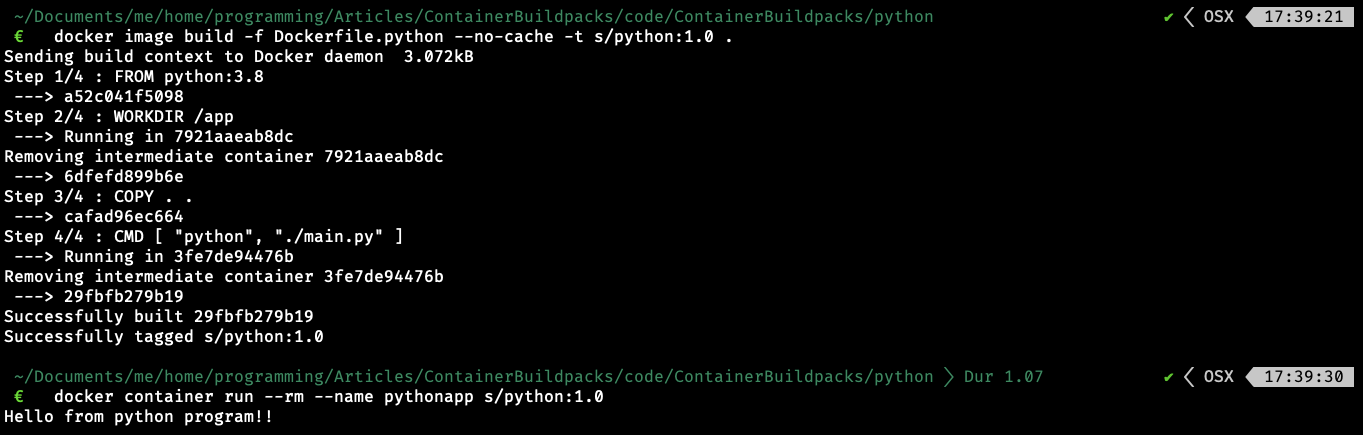

For our Python project, the Dockerfile looks like this:

It is a very simple Dockerfile. It uses the Python 3.8 base image, copies main.py into the working directory, and runs it.

We build the Python image from Dockerfile.python and run it as follows:
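Putting the description and the build together, a sketch might look like this. The full python:3.8 tag (rather than a slim variant), the /app working directory, and the s/python:1.0 tag are assumptions.

```shell
# Write a hypothetical Dockerfile.python matching the description above
cat > Dockerfile.python <<'EOF'
FROM python:3.8
WORKDIR /app
COPY main.py .
CMD ["python", "main.py"]
EOF

# Hypothetical tag for the Dockerfile-based image
IMAGE="s/python:1.0"

# Build with the named Dockerfile, then run and clean up the container afterwards
docker build -f Dockerfile.python -t "$IMAGE" .
docker run --rm "$IMAGE"
```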

Everything we have seen so far is the vanilla Docker image creation process using a Dockerfile. We specified exactly which dependencies we wanted, where to copy our files, and how to start the application.

In a cloud environment, we probably don’t care which base image is used. We just want something that takes our code, figures out which dependencies it requires, and works out how to start the application. Buildpacks do exactly that.

The container build process working for us

According to the Cloud Native Computing Foundation:

“A buildpack is a unit of work that inspects your app source code and formulates a plan to build and run your application.”

Buildpacks use various detection mechanisms to decide how to build your source code and run your application, but I am not going into the details in this article. Instead, we will see how to use buildpacks to create an image without a Dockerfile.

DotNet Core:

Once again, we start by building and publishing our console app. We then use pack, the command-line tool for Cloud Native Buildpacks, to build our image, this time tagged console:2.0:

The first part of the output shows the command we ran:

pack build s/console:2.0 --builder gcr.io/buildpacks/builder:v1

It uses the pack build command to create an s/console:2.0 image. Instead of a Dockerfile, it uses the Google Cloud builder gcr.io/buildpacks/builder:v1, which is itself a Docker image based on Ubuntu 18 that provides buildpacks for .NET, Go, Java, Node.js, and Python. As we’ll see, this is the only builder we need for the rest of our projects.

In the output, we can see it detect which version of .NET Core to use (it does this by reading the .csproj file). It then builds the myconsole app and creates the Docker image s/console:2.0.

We run the image in the usual way:
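Unless told to publish to a registry, pack build stores its result in the local Docker daemon. That means the usual Docker commands work on it unchanged. A minimal sketch:

```shell
IMAGE="s/console:2.0"

# The buildpack-built image shows up in the local image list like any other
docker image ls "$IMAGE"

# Run it exactly as we ran the Dockerfile-based image
docker run --rm "$IMAGE"
```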

Node:

Similarly, for our Node project we run:

Note that it reads our package.json to detect which Node version to use.

Again, the only command we had to run was:

pack build s/node:2.0 --builder gcr.io/buildpacks/builder:v1

Running the image and connecting to localhost:

Python:

For Python, we do need to help the buildpack by giving it the exact command to run when the container starts. There are several ways to do this; we use the environment variable approach:

pack build s/python:2.0 --builder gcr.io/buildpacks/builder:v1 --env GOOGLE_ENTRYPOINT="python main.py"

And the output:

And running it:

That’s it! We just created Docker images for applications built with three different technologies without writing a single Dockerfile ourselves. How cool is that?! 😃

Conclusion

I have only scratched the surface of what Cloud Native builders and buildpacks are capable of, and I can’t wait to explore them further. I hope this introduction makes you curious enough to explore them as well.

There are various other builders one can use. The following command lists some of them:
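In recent pack releases, the builder-related commands are grouped under pack builder. Older releases used the flat command names shown in the comments, so check pack --help for your version. The second half is optional. It makes the Google builder the default so that the --builder flag can be omitted from pack build.

```shell
# List suggested builders (older pack releases: pack suggest-builders)
pack builder suggest

# Optional: make the Google builder the default for subsequent pack build calls
BUILDER="gcr.io/buildpacks/builder:v1"
pack config default-builder "$BUILDER"
# Older pack releases: pack set-default-builder "$BUILDER"
```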

In this article, we used the Google builder.

You will find all the code for this article in my GitHub Repo.